Busy software, that frustrating lag and spinny wheel of doom, is something every user has encountered. We’re diving deep into what makes software sluggish, from poorly written code to network hiccups, and how to fix it. This isn’t just about technical details; we’ll explore how slow software impacts the user experience, from minor annoyances to major productivity killers.

Get ready to optimize your digital life!

This exploration will cover the characteristics of “busy” software, examining common causes ranging from inefficient algorithms to external factors like network latency. We’ll delve into practical methods for measuring and optimizing software performance, including strategies for improving code efficiency and designing user interfaces that effectively communicate progress during intensive operations. The impact on user experience will be a central theme, along with architectural considerations and effective testing methodologies.

Finally, we’ll examine real-world case studies, illustrating the process of diagnosing and resolving performance bottlenecks.

Defining “Busy Software”

“Busy software” refers to applications that consume significant system resources, leading to noticeable performance degradation. This isn’t just about slow loading times; it encompasses a broader impact on the user experience and the overall system’s responsiveness. Think sluggish interactions, freezing, and an overall frustrating user journey. Software can become “busy” due to inefficient coding, poorly optimized algorithms, or simply handling a large volume of data or complex tasks.

The impact can range from minor annoyances to complete system crashes, depending on the severity of resource consumption and the system’s capabilities.

Characteristics of Busy Software

Busy software typically exhibits several key characteristics. High CPU usage is a common indicator, as is excessive memory consumption. The application might frequently access the hard drive, leading to noticeable delays and potentially impacting other running processes. Furthermore, a noticeable increase in network activity can also point towards a resource-intensive application. These indicators often manifest as a slow, unresponsive interface, making it difficult for users to perform tasks efficiently.

Examples of Resource-Intensive Applications

Many applications, especially those dealing with large datasets or complex calculations, can become resource hogs. Video editing software, like Adobe Premiere Pro or DaVinci Resolve, often requires significant processing power and RAM. 3D modeling and rendering software, such as Blender or Autodesk Maya, are notorious for their high CPU and GPU demands. Games, particularly modern AAA titles, are also known to consume substantial system resources.

Finally, large database applications or enterprise resource planning (ERP) systems can strain server resources under heavy load.

User Experience Implications of Busy Software

The user experience of interacting with busy software is often characterized by frustration and inefficiency. Slow response times to user input, such as clicks or keystrokes, can lead to a feeling of sluggishness and interruption of workflow. Frequent freezes or crashes disrupt the user’s task and can lead to data loss. The overall effect is a negative user experience, impacting productivity and satisfaction.

Users may become impatient, resorting to workarounds or abandoning the application altogether. This can negatively impact user adoption and retention.

Causes of Software Busyness

Software busyness, that frustrating feeling of your application grinding to a halt, isn’t just a matter of bad luck. It’s often the result of specific design choices and external factors that conspire to create a less-than-ideal user experience. Understanding these causes is the first step toward building snappier, more responsive software. Poorly written code and inefficient algorithms are major culprits in slowing down applications.

External factors, like network connectivity issues, also contribute significantly to the perception of software busyness. Let’s dive into the details.

Inefficient Programming Practices

Many common programming practices, if not carefully considered, can lead to significant performance bottlenecks. For example, inefficient use of loops can dramatically increase processing time, especially when dealing with large datasets. Similarly, excessive memory allocation and deallocation puts pressure on the allocator or garbage collector, while holding on to objects that are no longer needed (or never freeing them in languages without garbage collection) leads to memory leaks and slowdowns. Another common problem is recursion that lacks a proper base case or simply goes too deep, potentially leading to stack overflow errors and application crashes.

Failing to optimize database queries can also result in significantly increased query times. Consider a scenario where a developer iterates through a list of 10,000 items using a nested loop; this O(n^2) complexity will be far slower than an optimized O(n) approach.
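To make the nested-loop point concrete, here is a minimal sketch that finds the items two lists have in common. The function names and sample data are made up for illustration; the only point is that building a set once replaces the inner loop with an average constant-time lookup.

```python
def common_items_quadratic(a, b):
    """O(n * m): for every item in a, scan b until a match is found."""
    result = []
    for x in a:
        for y in b:
            if x == y:
                result.append(x)
                break
    return result


def common_items_linear(a, b):
    """O(n + m) on average: build a set once, then do hash lookups."""
    seen = set(b)
    return [x for x in a if x in seen]


# Illustrative data only: even numbers and multiples of three below 2,000.
a = list(range(0, 2_000, 2))
b = list(range(0, 2_000, 3))

# Both versions return the same answer; only the amount of work differs.
assert common_items_quadratic(a, b) == common_items_linear(a, b)
```

With 10,000 items in each list, the nested version can perform up to 100 million comparisons, while the set-based version does roughly 20,000 operations.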

Impact of Poorly Designed Algorithms

The choice of algorithm significantly impacts resource consumption. Using an inefficient algorithm to sort a large dataset can result in unacceptably long processing times. For instance, a bubble sort algorithm, which has O(n^2) time complexity, will perform significantly worse than a merge sort (O(n log n)) or quicksort (average O(n log n)) for large datasets. Similarly, inefficient search algorithms, such as a linear search (O(n)), can be dramatically slower than a binary search (O(log n)) when dealing with sorted data.

This difference in performance becomes especially noticeable as the size of the dataset increases. Imagine searching a phone book: a linear search would require checking each entry, while a binary search would cut the search space in half with each comparison.
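The phone book comparison can be sketched in a few lines. This is only an illustration: the names are invented, and the binary search leans on Python’s standard `bisect` module rather than a hand-written loop.

```python
from bisect import bisect_left


def linear_search(items, target):
    """Check every entry in order: O(n) comparisons in the worst case."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Halve the search space each step: O(log n). Requires sorted input."""
    index = bisect_left(sorted_items, target)
    if index < len(sorted_items) and sorted_items[index] == target:
        return index
    return -1


# A tiny, made-up phone book, sorted by name.
names = sorted(["Avery", "Blake", "Casey", "Devon", "Emery", "Finley", "Harper"])

assert linear_search(names, "Devon") == binary_search(names, "Devon")
```

For a million sorted entries, the linear version may need up to a million comparisons, while the binary version needs about twenty.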

Role of External Factors

External factors, such as network latency and unreliable network connections, play a crucial role in the perceived busyness of software. Applications that rely heavily on network communication, like web applications or cloud-based services, are particularly susceptible. High latency, caused by slow internet connections or overloaded servers, can lead to noticeable delays in loading data or responding to user input.

For example, a web application that relies on multiple API calls to render a single page might experience significant slowdowns if any of these API calls are delayed due to network issues. This is exacerbated by the increasing reliance on cloud services and microservices architectures, where delays in one part of the system can cascade through the entire application.

Network outages, while less frequent, can completely halt the functionality of applications dependent on external resources.
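One common way to soften the multiple-API-call problem is to issue the calls concurrently and put a timeout on each one. The sketch below simulates this with `asyncio`; `fake_api_call`, the endpoint names, and the delays are all placeholders standing in for real network requests.

```python
import asyncio


async def fake_api_call(name, delay):
    """Placeholder for a real request; the sleep simulates network latency."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def load_page_sequential(calls):
    """Total wait is roughly the sum of all delays."""
    return {name: await fake_api_call(name, delay) for name, delay in calls}


async def load_page_concurrent(calls, timeout=1.0):
    """Total wait is roughly the slowest call, capped by the timeout."""
    tasks = [asyncio.wait_for(fake_api_call(n, d), timeout) for n, d in calls]
    results = await asyncio.gather(*tasks, return_exceptions=True)
    # A timed-out or failed call degrades to a fallback instead of blocking the page.
    return {
        name: (result if isinstance(result, str) else "unavailable")
        for (name, _), result in zip(calls, results)
    }


# Hypothetical endpoints with simulated delays in seconds.
CALLS = [("profile", 0.05), ("cart", 0.05), ("recommendations", 0.05)]
page = asyncio.run(load_page_concurrent(CALLS))
```

The design choice here is that one slow dependency degrades a single part of the page rather than stalling the whole thing.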

Measuring Software Busyness

Objectively assessing software “busyness” requires a multi-faceted approach, combining quantitative measures of resource utilization with qualitative assessments of user experience. This allows for a more complete understanding of performance and responsiveness, going beyond simple metrics like CPU load. We need to move beyond simple heuristics and develop a robust system that accurately reflects the complexities of modern software. This involves a combination of automated monitoring and user feedback mechanisms to create a holistic view of software performance.

Resource Utilization Measurement

A system for objectively measuring software resource utilization should incorporate several key components. First, we need continuous monitoring of CPU usage, memory consumption, and disk I/O operations. This can be achieved through system-level tools like `top` (Linux/macOS) or Task Manager (Windows), or via dedicated application performance monitoring (APM) tools. These tools provide real-time data on CPU percentage, resident memory size, page faults, and disk read/write operations.

The data should be collected at regular intervals (e.g., every second or minute) and stored for later analysis. Analyzing trends over time is crucial for identifying performance bottlenecks. For example, a consistently high CPU usage percentage over a prolonged period might indicate an inefficient algorithm, while spikes in disk I/O could suggest excessive file access.
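System tools and APM products do this monitoring continuously. As a much smaller illustration, the sketch below measures a single function call using only the standard library. The `measure` helper is hypothetical, and note that `tracemalloc` only tracks memory allocated by Python itself, so it is not a substitute for resident memory size.

```python
import time
import tracemalloc


def measure(func, *args, **kwargs):
    """Run func once and report wall time, CPU time, and peak Python memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    try:
        result = func(*args, **kwargs)
    finally:
        cpu_seconds = time.process_time() - cpu_start
        wall_seconds = time.perf_counter() - wall_start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    stats = {
        "wall_seconds": wall_seconds,
        "cpu_seconds": cpu_seconds,
        "peak_kib": peak_bytes / 1024,
    }
    return result, stats


# Example workload: sort 100,000 numbers that start in reverse order.
result, stats = measure(sorted, range(100_000, 0, -1))
```

A large gap between wall time and CPU time hints that the code spent its time waiting (on disk, network, or locks) rather than computing.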

Quantifying User-Perceived Busyness

Measuring user-perceived busyness is more challenging than measuring resource utilization. Direct methods include user surveys and questionnaires asking users to rate the responsiveness of the application under different conditions. Indirect methods involve tracking metrics such as response times to user inputs (e.g., button clicks, keyboard actions), the frequency of freezes or hangs, and the time taken to complete common tasks.

These metrics can be correlated with resource utilization data to identify situations where high resource consumption translates into a poor user experience. For example, a small increase in CPU usage might be imperceptible to the user, while a large increase could lead to noticeable lag or freezes. A well-designed system would aggregate these metrics to provide a composite score representing the overall user experience.
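As a rough sketch of the indirect approach, recorded response times can be summarized with percentiles instead of a plain average, since a handful of very slow interactions is often what users remember. The `summarize_latencies` helper and the 100 ms “slow” threshold are assumptions for illustration, not established cut-offs.

```python
from statistics import quantiles


def summarize_latencies(samples_ms, slow_threshold_ms=100):
    """Summarize response times: median, 95th percentile, share of slow ones."""
    # n=100 yields 99 cut points; index 49 is the median, index 94 the p95.
    cuts = quantiles(samples_ms, n=100, method="inclusive")
    slow = sum(1 for sample in samples_ms if sample > slow_threshold_ms)
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "slow_fraction": slow / len(samples_ms),
    }


# Made-up samples: 1 ms, 2 ms, ..., 101 ms.
summary = summarize_latencies(list(range(1, 102)))
```

A composite score could then be built on top of values like these, but how to weight them is a product decision rather than something this sketch can settle.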

Comparison of Metrics

Different metrics provide different perspectives on software performance and resource consumption. CPU usage provides a general indication of processing load, but doesn’t reveal the source of the load. Memory consumption indicates how much memory the application is using, but doesn’t show whether this is efficient or excessive. Disk I/O metrics reflect the amount of data being read and written, which can be crucial for identifying performance bottlenecks in database-intensive applications.

User-perceived busyness, though subjective, is a critical measure of overall application quality. A comprehensive assessment should consider all these metrics, and potentially others, to get a holistic view. For example, comparing a high CPU usage with a low user-perceived busyness might suggest that the application is optimized for its task, while high CPU usage coupled with poor responsiveness points towards a performance issue.

A simple example: Consider a video editing software. High CPU and memory usage are expected during rendering, but if this translates into an unresponsive interface, the user experience is negatively affected. The system should ideally correlate these various metrics to provide a more nuanced understanding of software performance.

Optimizing Software Performance

Optimizing software performance is crucial for combating “busyness,” that frustrating state where an application feels sluggish and unresponsive. This involves a multi-pronged approach targeting both computationally intensive operations and memory management. By focusing on efficiency in these areas, developers can significantly improve the user experience and overall application responsiveness.

Improving Efficiency of Computationally Intensive Tasks

Computationally intensive tasks, such as complex calculations or image processing, can significantly impact software performance. Strategies to improve their efficiency often involve algorithmic optimization and parallelization techniques. Algorithmic improvements focus on reducing the number of operations required to complete a task, while parallelization leverages multiple processing cores to perform computations concurrently.
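Here is a minimal sketch of the parallelization idea using the standard library’s `ProcessPoolExecutor`. Counting primes is just a stand-in for any CPU-heavy task, and the function names and default worker count are illustrative assumptions.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds):
    """Count primes in the half-open range [lo, hi) by trial division."""
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def split_range(lo, hi, parts):
    """Split [lo, hi) into at most `parts` contiguous chunks."""
    step = max(1, (hi - lo + parts - 1) // parts)
    return [(start, min(start + step, hi)) for start in range(lo, hi, step)]


def count_primes_parallel(lo, hi, workers=4):
    """Give each chunk to a separate process and add up the partial counts."""
    chunks = split_range(lo, hi, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))
```

When calling `count_primes_parallel` from a script, do so under an `if __name__ == "__main__":` guard, which platforms that spawn fresh worker processes require. Parallelism also has start-up and communication costs, so it tends to pay off only when each chunk carries a meaningful amount of work.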

Reducing Memory Usage in Software Applications

Excessive memory usage is another common cause of software busyness. Applications that consume large amounts of memory can lead to swapping, where the operating system moves data between RAM and the hard drive, resulting in significant performance slowdowns. Effective memory management involves techniques like efficient data structures, memory pooling, and avoiding unnecessary object creation.
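One small example of avoiding unnecessary object creation: if values are only needed once, a generator can produce them on demand instead of building the whole list in memory. The function names below are illustrative.

```python
import sys


def squares_list(n):
    """Materializes all n results in memory at once."""
    return [i * i for i in range(n)]


def squares_stream(n):
    """Yields results one at a time; memory use stays roughly constant."""
    return (i * i for i in range(n))


n = 100_000
as_list = squares_list(n)
as_stream = squares_stream(n)

# sys.getsizeof reports only the container itself (not the integers it
# refers to), which is already enough to show the difference in scale.
list_bytes = sys.getsizeof(as_list)
stream_bytes = sys.getsizeof(as_stream)

# Both approaches give the same total.
assert sum(as_list) == sum(squares_stream(n))
```

The trade-off is that a generator can only be consumed once, so this fits pipelines that process data in a single pass.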

Code Optimization Strategies

Several code optimization strategies can directly address busyness. One example is using more efficient data structures. For instance, using a hash table instead of a linked list for frequent lookups can dramatically reduce execution time. Another strategy is to avoid redundant calculations by caching frequently accessed data. This prevents repeated computation of the same values.

Consider the example of a Fibonacci sequence calculator: instead of recalculating each Fibonacci number from scratch, store previously calculated numbers in a cache (e.g., a dictionary or array) to retrieve them instantly when needed. This simple optimization can greatly improve performance for larger Fibonacci numbers. Finally, profiling tools can pinpoint performance bottlenecks within your code, guiding optimization efforts to the areas that yield the most significant improvements.

These tools provide detailed information on function execution times and memory usage, allowing developers to identify and address performance issues effectively.
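The Fibonacci caching idea described above can be sketched with `functools.lru_cache` from the standard library, which stores previously computed results in a dictionary-like cache. This is one possible way to do it, not the only one.

```python
from functools import lru_cache


def fib_naive(n):
    """Recomputes the same subproblems over and over: exponential time."""
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_cached(n):
    """Each value is computed once and then served from the cache."""
    return n if n < 2 else fib_cached(n - 1) + fib_cached(n - 2)


# Same answers, very different amounts of work.
assert fib_naive(20) == fib_cached(20)
```

The naive version becomes painfully slow somewhere in the 30s, while the cached version handles an input like 90 almost instantly because it makes only one real computation per distinct argument.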

User Interface Considerations

When dealing with busy software, the user interface (UI) is crucial. A poorly designed UI can amplify the feeling of sluggishness, frustrating users and undermining the software’s perceived value, even if the underlying performance is actually quite good. A well-designed UI, however, can mitigate the perception of slowness and keep users engaged and informed throughout lengthy processes. Effective communication is key. A well-designed UI for busy software needs to proactively address user anxiety by providing clear, consistent feedback.

This goes beyond simply displaying a progress bar; it’s about creating a sense of trust and control. Users need to know the software is working and, more importantly, *how* it’s working. This involves carefully choosing the right visual cues and feedback mechanisms.

Communicating Software Progress

Effective communication of software progress during busy operations requires more than just a generic “processing…” message. Imagine a complex video rendering application. Instead of a simple progress bar, the UI could display the current rendering stage (e.g., “Rendering scene 3 of 10”), the estimated time remaining, and even a visual representation of the rendered portion of the video.

This provides a much richer and more informative picture than a simple percentage complete. Consider also including visual cues like a spinning animation or a subtle progress bar that changes color to indicate different phases of the process. These elements work together to reassure the user that something is happening and provide a sense of predictability.
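A status line like the rendering example above could be produced along these lines. The `format_progress` helper is hypothetical, and the time estimate simply assumes the remaining items take as long on average as the ones already finished.

```python
def format_progress(stage, done, total, elapsed_seconds):
    """Build a status line with stage, percentage, and a rough time estimate."""
    if total <= 0 or not 0 <= done <= total:
        raise ValueError("expected 0 <= done <= total and total > 0")
    percent = 100 * done // total
    if done == 0:
        # No finished items yet, so there is nothing to extrapolate from.
        eta = "estimating time remaining"
    else:
        remaining = elapsed_seconds * (total - done) / done
        eta = f"about {round(remaining)} s remaining"
    return f"{stage} {done} of {total} ({percent}%), {eta}"


message = format_progress("Rendering scene", 3, 10, elapsed_seconds=30.0)
# message == "Rendering scene 3 of 10 (30%), about 70 s remaining"
```

Real estimates tend to jump around, so interfaces often smooth them or round them to coarser units before showing them.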

Feedback Mechanisms for Reassurance

Reassuring users that the software is working, even during high activity, requires a multi-pronged approach. Simple, subtle animations, such as a gently pulsing icon or a subtly changing progress bar, can help convey a sense of ongoing activity. Avoiding unresponsive interfaces is critical; even small visual cues like cursor changes can make a big difference. Furthermore, avoid using generic error messages.

Instead, provide specific, actionable feedback if a problem occurs. For instance, instead of “Error,” a more helpful message might be “Network connection lost. Please check your internet connection and try again.” This proactive communication fosters trust and reduces user frustration.
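In code, this often comes down to translating low-level exceptions into messages a user can act on. The sketch below uses Python’s built-in exception types; the `user_message` helper and the exact wording of the timeout and fallback messages are illustrative assumptions.

```python
def user_message(error):
    """Translate a low-level exception into specific, actionable text."""
    if isinstance(error, ConnectionError):
        return ("Network connection lost. Please check your internet "
                "connection and try again.")
    if isinstance(error, TimeoutError):
        return ("The server is taking too long to respond. "
                "Please try again in a moment.")
    # Fallback: still say what the user can do next.
    return ("Something went wrong and your last action was not completed. "
            "Please try again.")


# ConnectionResetError is a built-in subclass of ConnectionError.
text = user_message(ConnectionResetError())
```

Keeping this mapping in one place makes it easier to review the wording and to keep messages consistent across the application.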

Clear and Concise Progress Indicators

Progress indicators should be clear, concise, and easily understandable. Avoid technical jargon or ambiguous language. For example, a progress bar showing “75% complete” is far more effective than “Processing data segment 3 of 4, sub-process B, phase 2.” The former is immediately understandable, while the latter is likely to confuse the average user. The use of clear labels and units (e.g., “Files processed: 12/20”) can greatly improve clarity.

Visual cues like color-coding or animation can also enhance understanding and provide additional context. The goal is to provide users with enough information to understand what’s happening without overwhelming them with unnecessary detail.

Impact on User Experience

Slow or unresponsive software significantly impacts user satisfaction, leading to frustration and a negative perception of the product or service. This impact extends beyond simple annoyance; it can directly affect user productivity, loyalty, and even the bottom line for businesses relying on the software. Understanding the relationship between software performance and user experience is crucial for developers and designers aiming to create successful applications. Software busyness, manifested as lag, freezes, or unexpected delays, directly undermines user productivity and workflow.

Imagine a graphic designer whose design software constantly freezes, interrupting their creative flow and causing them to lose precious time. Or a financial analyst whose spreadsheet program struggles to handle large datasets, delaying critical reports and potentially impacting investment decisions. These scenarios highlight how performance issues translate into real-world consequences, affecting not just individual users but also organizational efficiency.

Negative Effects on User Satisfaction

Poorly performing software leads to a decline in user satisfaction. Users become frustrated with slow loading times, unresponsive interfaces, and unexpected crashes. This frustration can manifest in several ways, including negative reviews, reduced engagement with the software, and ultimately, abandonment of the application in favor of a competitor.

The faster the software, the happier the users. For example, if a user needs to complete a task in a specific timeframe, slow response times can cause them to miss deadlines or compromise the quality of their work. The resulting stress and inefficiency directly impact their overall satisfaction with the software.

Impact on Productivity and Workflow

Software busyness directly impacts user productivity and workflow efficiency. When software is slow or unresponsive, users spend more time waiting for tasks to complete, disrupting their concentration and flow. This wasted time can significantly reduce overall productivity. For instance, a software developer spending 15 minutes waiting for a program to compile each time will lose significant time compared to a developer using a faster compiler.

The cumulative effect of these delays can significantly impact project timelines and deadlines, especially in collaborative environments where multiple users rely on the same software. The disruption to workflow also leads to increased stress and reduced job satisfaction among users. Consider a customer service representative whose CRM system is constantly lagging; they’ll spend more time navigating the system and less time helping customers, resulting in lower customer satisfaction and potential revenue loss.

Strategies for Mitigating Negative User Experiences

Several strategies can mitigate the negative user experiences associated with software busyness. These strategies focus on improving software performance, providing clear feedback to users, and designing intuitive interfaces. Proactive performance monitoring and optimization are crucial. This includes regular testing and analysis to identify and address performance bottlenecks. Implementing features like progress indicators and loading animations provides users with visual feedback, reducing the perception of waiting time.

Furthermore, designing user interfaces that are efficient and intuitive minimizes the number of steps users need to take to complete tasks, thus improving overall experience. For example, using asynchronous loading techniques can prevent the entire interface from freezing while loading large datasets. Providing clear and concise error messages also helps users understand and resolve issues more efficiently.

A well-designed help system can guide users and reduce their frustration when encountering problems.
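The asynchronous loading technique mentioned above can be sketched with a background thread and a queue. Everything here is a stand-in: `load_dataset` fakes a slow load with a short sleep, and the loop in `wait_with_ui_ticks` plays the role a real UI event loop would play.

```python
import queue
import threading
import time


def load_dataset(n):
    """Stand-in for a slow load (hypothetical): sleeps, then returns n rows."""
    time.sleep(0.05)
    return list(range(n))


def load_in_background(n):
    """Start loading on a worker thread and return a queue for the outcome."""
    results = queue.Queue()

    def worker():
        try:
            results.put(("ok", load_dataset(n)))
        except Exception as exc:
            # Report failures too, so the waiting side never hangs forever.
            results.put(("error", exc))

    threading.Thread(target=worker, daemon=True).start()
    return results


def wait_with_ui_ticks(results, tick_seconds=0.01):
    """Poll for the result; each timeout is a chance to redraw the interface."""
    ticks = 0
    while True:
        try:
            status, payload = results.get(timeout=tick_seconds)
            return status, payload, ticks
        except queue.Empty:
            ticks += 1  # a real UI would update a spinner or progress bar here


status, rows, ticks = wait_with_ui_ticks(load_in_background(5))
```

The key property is that the thread doing the slow work is never the one responsible for responding to the user.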

Software Architecture and Busyness

Software architecture significantly impacts a program’s perceived “busyness.” The way a system is structured directly affects its responsiveness, resource utilization, and overall user experience. Choosing the right architecture is crucial for building applications that feel fast and efficient, rather than sluggish and unresponsive.

Different architectural styles have distinct performance characteristics. A monolithic architecture, where all components are tightly coupled within a single application, can become a bottleneck as the application grows. Microservices, on the other hand, break down the application into smaller, independent services, offering potential advantages in scalability and maintainability, but also introducing complexities of their own.

Monolithic Architecture Performance

Monolithic architectures, while simpler to initially develop and deploy, can suffer from performance degradation as they grow larger. Any change or update requires rebuilding and redeploying the entire application, which can mean downtime and disruption if deployments are not carefully managed. A failure in one component can bring down the whole system. Moreover, resource contention between different modules within the monolith can lead to performance bottlenecks and slowdowns, increasing the perceived busyness for the user.

Imagine a large e-commerce website built as a monolith; a surge in traffic could easily overwhelm the entire system, leading to slow loading times and frustrated users.

Microservices Architecture Performance

Microservices architectures aim to alleviate many of the issues found in monolithic systems. By decomposing the application into independent services, updates and deployments can be performed more granularly, minimizing downtime. Each service can be scaled independently based on its specific needs, optimizing resource utilization. However, microservices introduce complexities in terms of inter-service communication, data consistency, and overall system management.

The overhead of managing many smaller services can negate some of the performance benefits if not carefully planned and implemented. For example, excessive network calls between microservices can introduce latency, resulting in a slower user experience despite the individual services being highly performant.

Architectural Choices and Perceived Busyness

The choice between monolithic and microservices architectures significantly influences the perceived busyness of an application. A well-designed microservices architecture can result in a more responsive application, especially under heavy load, as individual services can scale independently. However, a poorly designed microservices architecture, with excessive inter-service communication or inefficient resource allocation, can be even slower and more unresponsive than a well-maintained monolith.

The key is to choose the architecture that best suits the application’s specific needs and scale expectations, carefully considering the trade-offs between complexity and performance.

Modular Design and Software Responsiveness

Modular design is a crucial principle for building responsive software, regardless of the chosen architecture. By breaking down the application into smaller, independent modules with well-defined interfaces, developers can improve code maintainability, reduce complexity, and enhance performance. Modules can be developed, tested, and deployed independently, accelerating the development process and reducing the risk of introducing bugs. Furthermore, modularity allows for better resource allocation and optimization, preventing resource contention and improving overall responsiveness.

A modular design makes it easier to identify and fix performance bottlenecks, leading to a smoother and less “busy” user experience. For instance, a module responsible for data visualization can be optimized separately from a module handling user authentication, improving the overall performance of the application without impacting other functionalities.

Testing for Busyness

Identifying and mitigating performance bottlenecks in busy software requires a robust testing strategy. This involves a combination of planned tests, automated monitoring, and clear reporting to pinpoint areas needing optimization. The goal is to create a detailed performance profile of the software under various loads and usage scenarios.

A well-structured test plan is crucial for effectively measuring software busyness. This plan should define specific tests targeting different aspects of the software, from individual modules to the entire system. Automated tests are essential for continuous monitoring and efficient analysis, providing repeatable and objective data. Finally, a concise report summarizing the test results allows for efficient identification and prioritization of optimization efforts.

Test Plan for Identifying Performance Bottlenecks

A comprehensive test plan should cover a range of scenarios, simulating real-world usage patterns. This includes testing under various load conditions, from low to high user activity. We need to consider different types of operations, such as data retrieval, complex calculations, and user interface interactions. The plan should also specify the metrics to be collected, such as response times, CPU utilization, memory usage, and network throughput.

For example, a test might involve simulating 100 concurrent users accessing a specific feature and measuring the average response time. Another test could focus on the impact of large data sets on database query performance.
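A toy version of the concurrent-users test might look like the following. It uses threads to simulate users and a fake `handle_request` function in place of a real feature; a dedicated load-testing tool would drive real network traffic against a deployed system instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def handle_request(user_id):
    """Placeholder for the feature under test; the sleep simulates its work."""
    time.sleep(0.01)
    return user_id


def timed_call(user_id):
    """Return how long one simulated request took, in milliseconds."""
    start = time.perf_counter()
    handle_request(user_id)
    return (time.perf_counter() - start) * 1000


def run_load_test(concurrent_users=100):
    """Fire one request per simulated user at the same time and summarize."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, range(concurrent_users)))
    return {
        "requests": len(latencies),
        "avg_ms": mean(latencies),
        "max_ms": max(latencies),
    }


report = run_load_test(concurrent_users=100)
```

Reporting the maximum alongside the average helps, because averages can hide the few very slow requests that users notice most.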

Automated Tests for Resource Utilization Monitoring

Automated tests are invaluable for continuous monitoring of resource utilization during software operations. Tools like JMeter or LoadRunner can simulate various user loads and monitor key metrics in real-time. These tests should be integrated into the continuous integration/continuous delivery (CI/CD) pipeline to ensure early detection of performance issues. For instance, an automated test could be set up to run every night, simulating peak user load and generating a report on CPU and memory usage.

Another example would be automated tests triggered after each code commit to check for regressions in performance.
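A per-commit performance check can be as simple as timing a critical operation and failing the build when it exceeds a budget. In this sketch, `build_index` is a made-up operation and the 5-second budget is deliberately generous; real budgets need to be calibrated on the machines that run the tests.

```python
import timeit


def build_index(n=10_000):
    """Hypothetical operation whose speed we want to protect."""
    return {i: str(i) for i in range(n)}


def check_budget(func, budget_seconds, repeats=5):
    """Time func several times and compare the best run to the budget."""
    # Taking the minimum reduces noise from other processes on the machine.
    best = min(timeit.repeat(func, number=1, repeat=repeats))
    return best <= budget_seconds, best


within_budget, best_seconds = check_budget(build_index, budget_seconds=5.0)
assert within_budget, f"build_index took {best_seconds:.3f}s, over budget"
```

Timing-based checks are sensitive to the hardware they run on, so many teams compare against a recorded baseline with some tolerance rather than a fixed number.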

Performance Testing Report

A clear and concise report is essential for communicating the results of performance testing. The report should include a summary of the testing methodology, the results, and recommendations for improvement. The use of visual aids, such as charts and graphs, can enhance the clarity and impact of the report. Below is an example of a table that can be used to display key performance metrics.

| Test Case | Average Response Time (ms) | CPU Utilization (%) | Memory Usage (MB) |
|---|---|---|---|
| Login Process | 250 | 15 | 50 |
| Data Retrieval (Small Dataset) | 100 | 5 | 20 |
| Data Retrieval (Large Dataset) | 1500 | 75 | 200 |
| Complex Calculation | 3000 | 90 | 150 |
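If test results are collected by a script, a table in this shape can be generated rather than typed by hand. The `render_report` helper below is a hypothetical sketch that reuses the same column headings and two of the example rows.

```python
def render_report(rows):
    """Render (test case, response ms, CPU %, memory MB) rows as a table."""
    header = [
        "Test Case",
        "Average Response Time (ms)",
        "CPU Utilization (%)",
        "Memory Usage (MB)",
    ]
    lines = [
        "| " + " | ".join(header) + " |",
        "|" + "---|" * len(header),
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(cell) for cell in row) + " |")
    return "\n".join(lines)


table = render_report([
    ("Login Process", 250, 15, 50),
    ("Complex Calculation", 3000, 90, 150),
])
```

Generating the report from raw results keeps the published numbers in sync with what the tests actually measured.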

Case Studies of Busy Software

Software performance issues are a common problem, impacting everything from user experience to overall business success. Understanding how these issues manifest and how they’re resolved is crucial for building robust and efficient applications. This section delves into a real-world example, detailing the challenges, solutions, and lessons learned.

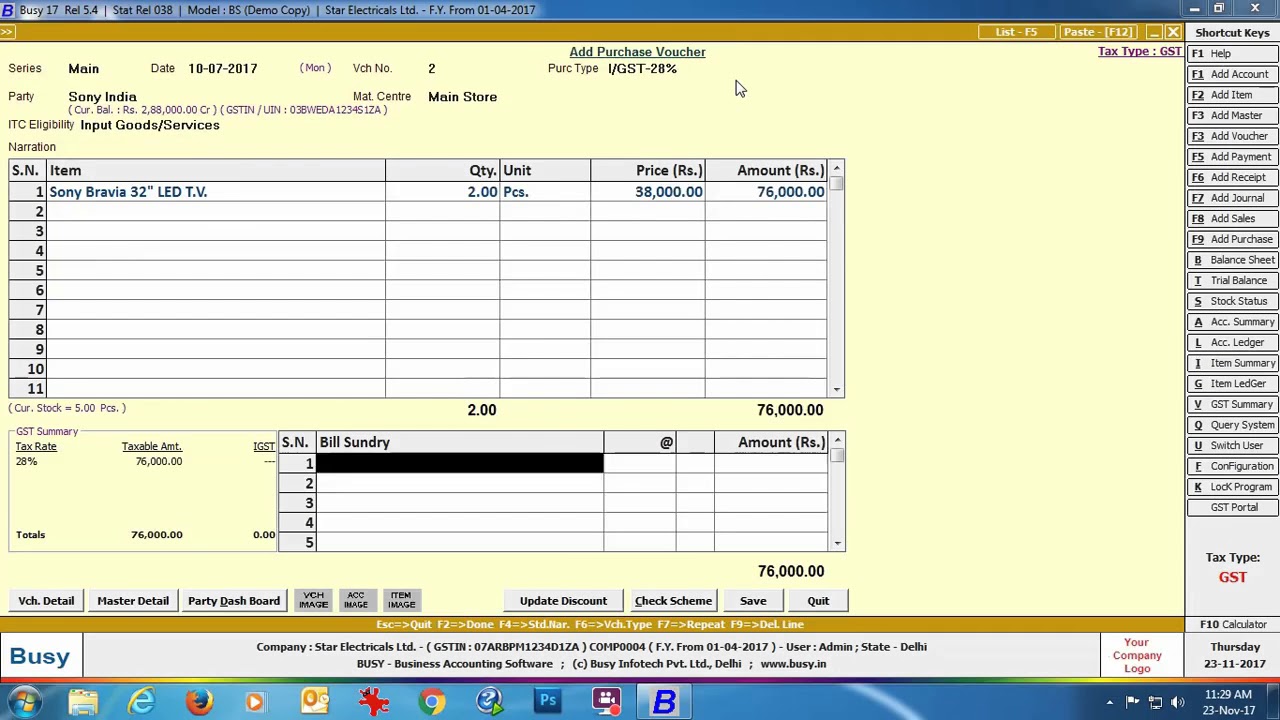

The Case of a High-Traffic E-commerce Platform

This case study focuses on a large e-commerce platform that experienced significant performance degradation during peak shopping seasons. The platform, which handled millions of transactions daily, suffered from slow load times, frequent crashes, and ultimately, lost sales. The core issue stemmed from a combination of factors, highlighting the complexity of diagnosing performance bottlenecks in large-scale applications.

Diagnosing the Performance Problems

The initial diagnosis involved a multi-pronged approach. Performance monitoring tools were used to identify bottlenecks in the system. This included analyzing database queries, identifying slow API calls, and pinpointing resource contention. Profiling tools helped to pinpoint specific code sections that were consuming excessive resources. Load testing was crucial in simulating peak traffic conditions to replicate and understand the observed issues under stress.

The team discovered several key problems: inefficient database queries that were causing significant delays, a lack of sufficient caching mechanisms leading to repeated computations, and inadequate scaling of the application servers to handle the surge in traffic.

Resolving the Performance Issues

Addressing the identified issues involved a combination of strategies. Database queries were optimized by adding indexes, rewriting inefficient queries, and implementing connection pooling. Caching mechanisms were significantly improved by implementing a robust caching strategy at multiple levels, reducing the load on the database and speeding up response times. The application servers were scaled horizontally by adding more instances to the server farm, distributing the load effectively.

Furthermore, the team implemented a more sophisticated load balancing system to ensure even distribution of traffic across the servers. Finally, they implemented comprehensive monitoring and alerting systems to provide early warnings of potential performance issues.
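The case study does not show the platform’s actual schema, so the following is only an illustration of the indexing step using an in-memory SQLite database. The `orders` table, its columns, and the index name are invented; the idea is to ask the database how it plans to run a query before and after the index exists.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's description of how it intends to execute a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " ".join(row[-1] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1_000)],
)

SQL = "SELECT id, total FROM orders WHERE customer_id = ?"

# Without an index, the filter requires scanning the whole table.
plan_before = query_plan(conn, SQL, (42,))

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")

# With the index, the database can jump straight to the matching rows.
plan_after = query_plan(conn, SQL, (42,))
```

Other database systems expose similar plan output under different commands, and checking the plan is usually a quicker way to confirm that an index is being used than timing the query.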

Lessons Learned and Future Applications

This experience underscored the importance of proactive performance monitoring and testing. The team learned that relying solely on reactive measures (fixing problems only after they occur) is insufficient for maintaining a high-performing application. Proactive performance testing throughout the development lifecycle, coupled with rigorous monitoring in production, is vital. The team also recognized the significance of careful database design and optimization.

Efficient queries and appropriate indexing are crucial for handling large datasets. Finally, this case highlights the necessity of planning for scalability from the outset. Designing applications with scalability in mind, through appropriate architecture choices and infrastructure provisioning, prevents performance issues as the application grows. These lessons can be directly applied to future projects by incorporating proactive performance testing into the Agile development process, adopting robust monitoring practices, and prioritizing efficient database design and scalability considerations.

End of Discussion

So, next time your software starts acting up, remember this: it’s not just a matter of a few extra seconds. Slow software translates to lost productivity, frustrated users, and potentially, lost business. By understanding the causes of software “busyness,” employing effective measurement techniques, and implementing smart optimization strategies, we can build better, faster, and more enjoyable software for everyone.

Let’s ditch the spinning wheels and embrace the smooth, responsive digital experience we deserve!

FAQ Overview

What’s the difference between “busy” software and software with a high CPU usage?

High CPU usage is a *cause* of perceived “busyness,” but not the only one. Software might feel sluggish due to slow disk I/O, network latency, or inefficient algorithms even if CPU usage isn’t excessively high. “Busyness” is the user’s perception of slow responsiveness.

Can I use free tools to measure software busyness?

Yes! Many free tools, like Task Manager (Windows) or Activity Monitor (macOS), provide basic metrics like CPU, memory, and disk usage. More advanced profiling tools often have free versions with limitations.

How can I tell if my software’s slowness is due to my hardware or the software itself?

Try running other demanding applications. If they also run slowly, the problem might be your hardware. If other apps are fine, but one specific app is slow, it’s likely a software issue.

Is there a “magic bullet” for fixing all busy software?

Unfortunately, no. Optimizing software performance often requires a multi-faceted approach, addressing issues in code, algorithms, and the user interface. Careful analysis and testing are key.